The Problem This Solved For Me in My HITL Experiment

Anyone doing extensive research with AI tools like Claude, Perplexity, or ChatGPT quickly accumulates large documents that need reviewing. Reading 5,000+ word research pieces on screen isn't always practical, easy on the eyes, or enjoyable, especially when you're on the move. The obvious solution would be a commercial text-to-speech service, but these come with recurring subscription costs that add up quickly, even in months when you barely use them.

What we've discovered through the HITL experiment is that, with support from AI, it is now much easier to build your own microservices, like text-to-voice, at negligible cost. After a month of heavy usage, the total bill was £1.40, and this included everything in my project. In fact, running the massive-research.md example file (2,312 words) about 8 times while recording this walkthrough resulted in the astronomical :-) cost of 0.01p, or 0.000054p per word.

This also represents a broader HITL principle: getting closer to where compute actually happens rather than paying SaaS platform pricing for straightforward tasks.

This walkthrough shows you how to build a text-to-voice function from scratch using Google Cloud, explaining each step through the user interface rather than command-line instructions. The goal isn't just to give you a working service, but to demystify how these microservices work.

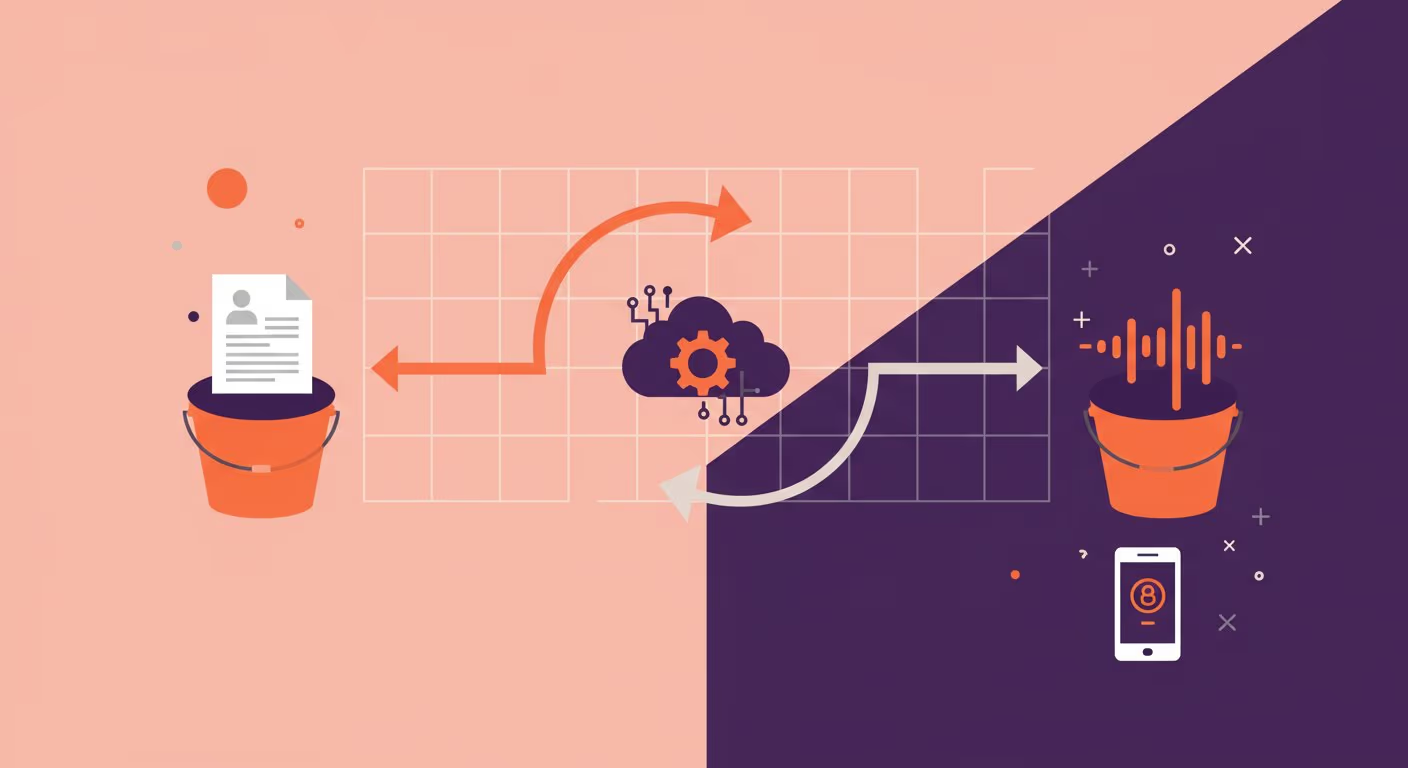

The Architecture: Why Two Buckets?

The system uses a simple trigger-based architecture:

- Input bucket - Where your markdown or text files land

- Cloud Function - Processes the file and converts text to speech

- Output bucket - Where your audio files are stored (publicly accessible)

By using two separate buckets, cleanup becomes trivial—simply delete everything periodically without affecting your function setup. The output bucket is deliberately public, allowing you to access your audio files from any device without authentication hassles.

Prerequisites

- A Google Cloud account (new accounts get $300 in credits)

- Basic familiarity with cloud services (though we'll walk through everything)

- Markdown or text files you want converted to audio, to test at the end of this

Step 1: Create a New Google Cloud Project

I started from scratch. You can add this to an existing project. Starting from fresh helped me to replicate the exact setup process without inheriting any existing configurations.

- Navigate to Google Cloud Console

- Create a new project

- Name it something meaningful (e.g., "text-to-voice")

- Wait for the project creation to complete

- Switch to your new project

HITL Note: Even if you have existing projects, creating a dedicated project for microservices keeps costs transparent and makes it easier to manage permissions down the line.

Step 2: Create Your Storage Buckets

Input Bucket (Private)

- Navigate to Cloud Storage > Buckets from the left-hand menu

- Click Create Bucket

- Name: text-input-md (or your preferred name)

- Region: Choose your preferred region (e.g., Europe)

- Storage class: Standard

- Access control: Prevent public access (leave default)

- Click Create

Output Bucket (Public)

- Create another bucket

- Name: my-voice-files (or your preferred name)

- Region: Same region as input bucket (important)

- Storage class: Standard

- Access control: Uncheck "Prevent public access"

- Click Create

Make Output Bucket Truly Public

The bucket needs one additional permission setting:

- Navigate to your output bucket

- Go to the Permissions tab

- Click Grant Access

- New principals: allUsers

- Role: Storage Object Viewer

- Confirm when prompted about public access

After refreshing the page, you should see the bucket marked as public. This allows you to click audio file links from anywhere—phone, tablet, desktop—without authentication.

Record These Names: You'll need both bucket names and your project ID for the function configuration.

Step 3: Enable Required APIs

Before creating the function, enable the necessary Google Cloud APIs:

Enable Eventarc API

- Search for Eventarc in the console

- Click Enable

- Wait for API activation

Enable Cloud Text-to-Speech API

- Search for Cloud Text-to-Speech API

- Click Enable

- Wait for API activation

Enable Cloud Functions API

(optional, as you will be prompted to do this automatically when setting up the function later)

- Search for Cloud Functions API

- Click Enable

- Wait for API activation

Why This Matters: These APIs allow your function to listen for file uploads (Eventarc) and perform the text-to-speech conversion (Cloud Text-to-Speech).

Step 4: Create the Cloud Function

Initial Function Setup

- Navigate to Cloud Run

- Click Create Service

- Select "Use inline editor to create a function"

- Function name: text-to-voice (or your preference)

- Region: Same region as your buckets (e.g., London for EU)

- Runtime: Python 3.13

Configure the Trigger

The trigger makes this function fire automatically when files arrive:

- Click Add Trigger

- Trigger type: Cloud Storage

- Event: On (finalizing/creating) file in the selected bucket

- Bucket: Select your input bucket (text-input-md)

- Service account: Default compute service account (simplest for now)

- Authentication: Require authentication (leave default)

Note: Using the default service account is fine for personal projects. Production systems should use custom service accounts with minimal permissions.

Save and Enable

- Click Save on trigger

- Click Create on the function

- Enable Cloud Functions API when prompted

The system will now create your function with example code, which we'll replace in the next step.

Step 5: Add the Function Code

Main Function Code

- In the Cloud Function editor, select the main.py tab

- Select all existing code (Ctrl+A) and delete it

- Paste the following:

import functions_framework
from google.cloud import storage
from google.cloud import texttospeech
import re
import os
from datetime import datetime

# Configuration: edit the values inside the quotes only
INPUT_BUCKET = "<your-input-bucket-name-here>"  # e.g. "my-text-input"
OUTPUT_BUCKET = "<your-output-bucket-name-here>"  # e.g. "my-voice-files"
PROJECT_ID = "<your-gcp-project-id>"  # e.g. "text-to-voice-123456"


def clean_markdown_for_tts(markdown_content):
    """
    Clean markdown content for text-to-speech synthesis.
    Removes or converts markdown formatting that would be read aloud awkwardly.
    """
    if not markdown_content:
        return ""

    text = markdown_content

    # Remove fenced code blocks first. If inline backticks were handled first,
    # they would consume part of each fence and the block would survive.
    text = re.sub(r'```[\s\S]*?```', '', text)

    # Remove markdown headers (##, ###, etc.) - just keep the text
    text = re.sub(r'^#{1,6}\s+', '', text, flags=re.MULTILINE)

    # Remove markdown links but keep the text - [text](url) -> text
    text = re.sub(r'\[([^\]]+)\]\([^\)]+\)', r'\1', text)

    # Remove inline code backticks
    text = re.sub(r'`([^`]+)`', r'\1', text)

    # Remove bold/italic markdown but keep text
    text = re.sub(r'\*\*([^\*]+)\*\*', r'\1', text)  # **bold**
    text = re.sub(r'\*([^\*]+)\*', r'\1', text)  # *italic*
    text = re.sub(r'__([^_]+)__', r'\1', text)  # __bold__
    text = re.sub(r'_([^_]+)_', r'\1', text)  # _italic_

    # Remove list markers but keep spacing
    text = re.sub(r'^\s*[-\*\+]\s+', '', text, flags=re.MULTILINE)  # Unordered lists
    text = re.sub(r'^\s*\d+\.\s+', '', text, flags=re.MULTILINE)  # Ordered lists

    # Remove blockquote markers
    text = re.sub(r'^\s*>\s*', '', text, flags=re.MULTILINE)

    # Remove horizontal rules
    text = re.sub(r'^[-\*_]{3,}\s*$', '', text, flags=re.MULTILINE)

    # Clean up multiple newlines
    text = re.sub(r'\n{3,}', '\n\n', text)

    # Clean up multiple spaces
    text = re.sub(r' {2,}', ' ', text)

    # Strip leading/trailing whitespace
    text = text.strip()

    return text


def generate_output_filename(input_filename):
    """
    Generate output filename in format: {md_title}_{dd-mm-yyyy}.wav
    Note: Using .wav since Long Audio API output only works with LINEAR16
    """
    # Drop the final extension (.md or .txt) only, not every occurrence in the name
    base_name = os.path.splitext(input_filename)[0]

    # Clean filename for safe storage
    safe_name = re.sub(r'[^a-zA-Z0-9\s\-_]', '', base_name)
    safe_name = re.sub(r'\s+', '_', safe_name.strip())

    # Add date suffix
    today = datetime.now()
    date_suffix = today.strftime("%d-%m-%Y")

    return f"{safe_name}_{date_suffix}.wav"


@functions_framework.cloud_event
def process_tts_input(cloud_event):
    """
    Cloud Function triggered by a file upload to the input bucket.
    Processes markdown or text files using Google Cloud Long Audio Synthesis.
    """
    # Get event data
    data = cloud_event.data
    bucket_name = data['bucket']
    object_name = data['name']

    print(f"Processing file: gs://{bucket_name}/{object_name}")

    # Verify it's from the input bucket
    if bucket_name != INPUT_BUCKET:
        print(f"Ignoring file from bucket: {bucket_name}")
        return

    # Only process .md or .txt files
    if not (object_name.endswith('.md') or object_name.endswith('.txt')):
        print(f"Ignoring file without .md or .txt extension: {object_name}")
        return

    try:
        # Initialize clients
        storage_client = storage.Client()
        tts_client = texttospeech.TextToSpeechLongAudioSynthesizeClient()

        # Read the input file
        input_bucket = storage_client.bucket(INPUT_BUCKET)
        blob = input_bucket.blob(object_name)
        markdown_content = blob.download_as_text()

        print(f"Downloaded {len(markdown_content)} characters from {object_name}")

        # Clean markdown for TTS
        cleaned_text = clean_markdown_for_tts(markdown_content)

        if not cleaned_text.strip():
            print("No text content after cleaning, skipping synthesis")
            return

        print(f"Cleaned text length: {len(cleaned_text)} characters")

        # Generate output filename
        output_filename = generate_output_filename(object_name)
        output_gcs_uri = f"gs://{OUTPUT_BUCKET}/{output_filename}"

        print(f"Output will be saved to: {output_gcs_uri}")

        # Prepare TTS request
        synthesis_input = texttospeech.SynthesisInput(text=cleaned_text)

        # Voice configuration (matching the example)
        voice = texttospeech.VoiceSelectionParams(
            language_code="en-GB",
            name="en-GB-Neural2-B"
        )

        # Audio configuration (matching the example)
        audio_config = texttospeech.AudioConfig(
            audio_encoding=texttospeech.AudioEncoding.LINEAR16
        )

        # Parent path for the request
        parent = f"projects/{PROJECT_ID}/locations/global"

        # Create the long audio synthesis request
        request = texttospeech.SynthesizeLongAudioRequest(
            parent=parent,
            input=synthesis_input,
            audio_config=audio_config,
            voice=voice,
            output_gcs_uri=output_gcs_uri,
        )

        print("Starting long audio synthesis...")

        # Start the synthesis operation
        operation = tts_client.synthesize_long_audio(request=request)

        # Wait for completion (5 minutes timeout)
        print("Waiting for synthesis to complete...")
        result = operation.result(timeout=300)

        print("Synthesis completed successfully!")
        print(f"Audio file saved to: {output_gcs_uri}")
        print(f"Operation result: {result}")

    except Exception as e:
        print(f"Error processing {object_name}: {str(e)}")
        # Re-raise so the failure is visible in the function logs
        raise


# For local testing
if __name__ == "__main__":
    # Test the markdown cleaning function
    sample_md = """
# Test Document

This is a **bold** statement and *italic* text.

## Section 2

- List item 1
- List item 2

Here's some `inline code` and a [link](https://example.com).

```python
def test():
    return "code block"
```

> This is a blockquote

---

Final paragraph.
"""

    cleaned = clean_markdown_for_tts(sample_md)
    print("Original:")
    print(sample_md)
    print("\nCleaned:")
    print(cleaned)

Configure Entry Point

In the Entry point field at the top, enter:

process_tts_input

This tells Cloud Functions which function to execute when triggered.

Requirements File

- Select the requirements.txt tab

- Delete existing content

- Paste the following:

functions-framework==3.*

google-cloud-storage==2.*

google-cloud-texttospeech==2.*

Critical Configuration Variables

Before saving, update these three variables in the function code:

INPUT_BUCKET:

INPUT_BUCKET = "text-input-md" # Your input bucket name

OUTPUT_BUCKET:

OUTPUT_BUCKET = "my-voice-files" # Your output bucket name

PROJECT_ID:

PROJECT_ID = "your-project-id" # Found in URL: project=YOUR_ID

Finding Project ID: Look at your browser's URL bar—the project ID appears as project=xxxxx.
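A quick sanity check can catch the most common mistake at this point, which is deploying with a placeholder still in place. The sketch below is not part of the original function: `find_config_problems` is a hypothetical helper, and the pattern covers only the basic Cloud Storage bucket naming rules (3 to 63 characters, lowercase letters, digits, hyphens, underscores and dots, starting and ending with a letter or digit).

```python
import re

# Simplified bucket naming rule (an assumption for this sketch): 3-63 chars,
# lowercase letters, digits, '-', '_' and '.', starting and ending with a
# letter or digit.
BUCKET_NAME_PATTERN = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")


def find_config_problems(input_bucket, output_bucket, project_id):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    settings = {
        "INPUT_BUCKET": input_bucket,
        "OUTPUT_BUCKET": output_bucket,
        "PROJECT_ID": project_id,
    }
    for name, value in settings.items():
        if "<" in value or ">" in value:
            # The template values are wrapped in angle brackets
            problems.append(f"{name} still contains a placeholder: {value!r}")
        elif name.endswith("_BUCKET") and not BUCKET_NAME_PATTERN.match(value):
            problems.append(f"{name} is not a valid bucket name: {value!r}")
    if input_bucket == output_bucket:
        # This walkthrough relies on two separate buckets
        problems.append("INPUT_BUCKET and OUTPUT_BUCKET should be different buckets")
    return problems


if __name__ == "__main__":
    found = find_config_problems("text-input-md", "my-voice-files", "text-to-voice-123456")
    print(found or "Configuration looks fine")
```

Running this locally with your own three values before pasting them into the editor is optional, but it is cheaper than waiting for a deployment to fail.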

Deploy the Function

- Click Save

- Click Deploy

- Wait for deployment (this takes a few minutes)

During deployment, the function is packaged with all its dependencies and configured to respond to your storage bucket trigger.

Understanding What the Function Does

The function performs several important tasks before converting text to speech:

Text Cleaning Process

Removes Markdown Formatting:

- Strips hash symbols (headers) so voice doesn't say "hash hash hash"

- Removes bold and italic formatting markers

- Cleans up bulleted list formatting

- Handles blockquotes appropriately

Whitespace Management:

- Collapses runs of three or more line breaks, which would otherwise create awkward pauses

- Keeps single blank lines between paragraphs for natural speech flow

- Collapses repeated spaces and trims leading and trailing whitespace

File Format Support: The function only processes files ending in .md or .txt, ensuring proper text handling.
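One detail of the cleaning step is worth seeing in isolation: fenced code blocks need to be removed before inline backticks are unwrapped, otherwise the inline rule eats part of each fence and the code ends up being read aloud. The sketch below uses only those two rules; the function names are hypothetical and the sample text is made up for illustration.

```python
import re

FENCE = "`" * 3  # three backticks, built this way to keep the sample readable


def strip_code(text):
    """Remove fenced code blocks first, then unwrap inline code."""
    text = re.sub(r"`{3}[\s\S]*?`{3}", "", text)  # drop whole fenced blocks
    text = re.sub(r"`([^`]+)`", r"\1", text)      # `code` -> code
    return text


def strip_code_wrong_order(text):
    """The same two rules in the opposite order, for comparison."""
    text = re.sub(r"`([^`]+)`", r"\1", text)
    text = re.sub(r"`{3}[\s\S]*?`{3}", "", text)
    return text


sample = "Run `pip install` first.\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\nDone."

if __name__ == "__main__":
    print(repr(strip_code(sample)))              # code block removed
    print(repr(strip_code_wrong_order(sample)))  # code block text survives
```

With the rules in the second order, the text of the code block is still present in the result, which is exactly what you do not want a voice to read out.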

Voice Configuration

The function uses Google's synthesize_long_audio API, which can process files much larger than the 5,000-character limit of standard synthesis.

To change the voice, modify the part of the function shown below.

Language Code & Voice Selection:

voice = texttospeech.VoiceSelectionParams(
    language_code="en-GB",
    name="en-GB-Neural2-B"
)

Replace it with, for example:

voice = texttospeech.VoiceSelectionParams(
    language_code="en-US",
    name="en-US-Neural2-C"
)

You can find all the voices and codes in the Google Cloud Text-to-Speech documentation.
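When swapping voices, the language code needs to match the prefix of the voice name. A small sketch of keeping the two in step is shown here; `language_code_for` and `voice_settings` are hypothetical helpers, and they assume voice names follow the language-REGION-family-variant pattern seen in the two examples above.

```python
def language_code_for(voice_name):
    """Derive the language code from a voice name like 'en-GB-Neural2-B'."""
    parts = voice_name.split("-")
    if len(parts) < 3:
        raise ValueError(f"Unexpected voice name format: {voice_name!r}")
    # The first two parts are the language and the region
    return "-".join(parts[:2])


def voice_settings(voice_name):
    """Build the two keyword arguments passed to VoiceSelectionParams."""
    return {"language_code": language_code_for(voice_name), "name": voice_name}


if __name__ == "__main__":
    print(voice_settings("en-GB-Neural2-B"))
    print(voice_settings("en-US-Neural2-C"))
```

Inside the function, the result could be passed as `texttospeech.VoiceSelectionParams(**voice_settings("en-US-Neural2-C"))`, so only the voice name has to be edited.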

Output Format: The function outputs WAV files. Whilst MP3 would be more compressed, the long audio API currently only supports WAV in public beta. Given the minimal storage costs, this isn't a practical concern.

Why This Approach Works

By using the synthesize_long_audio API, the function can handle entire research documents in a single pass—no chunking, no stitching audio segments together. The initial version of this function was significantly more complex, breaking text into 5,000-character segments. The simplified version is more reliable and easier to maintain.
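For contrast, the chunking approach that the long audio API makes unnecessary looks roughly like the sketch below. It is not the original version of the function, only an illustration under stated assumptions: `chunk_text` is a hypothetical helper, the limit is counted in characters as described above, and sentences are split naively on a full stop followed by a space.

```python
def chunk_text(text, max_chars=5000):
    """Split text into chunks of at most max_chars, preferring sentence ends."""
    chunks = []
    current = ""
    for sentence in text.replace("\n", " ").split(". "):
        sentence = sentence.strip()
        if not sentence:
            continue
        if sentence[-1] not in ".!?":
            sentence += "."  # restore the full stop removed by split()
        # Hard-split a single over-long sentence so no chunk exceeds the limit
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) > max_chars:
            # Adding this sentence would overflow, so start a new chunk
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    print(chunk_text("One. Two. Three.", max_chars=10))
```

Each chunk would then need its own synthesis call, and the resulting audio segments would have to be stitched together in order. A single long audio request avoids both steps.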

Step 6: Testing Your Function

Manual Upload Test

- Navigate to Cloud Storage > Buckets

- Open your input bucket (e.g. text-input-md)

- Click Upload Files

- Select a markdown or text file

- Wait for upload to complete

NOTE:

Apple users - The function validates by file extension, so make sure your test file has .md or .txt at the end. macOS sometimes hides extensions by default, which can cause the function to ignore your file.
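The extension check in the function is a plain, case-sensitive suffix test, so it can be reproduced locally to see which names would be picked up. The helper name `would_be_processed` is hypothetical; the test itself mirrors the one in the function code.

```python
def would_be_processed(object_name):
    """Mirror the function's check: name must end in .md or .txt (case-sensitive)."""
    return object_name.endswith(".md") or object_name.endswith(".txt")


if __name__ == "__main__":
    names = ["massive-research.md", "notes.txt", "massive-research", "NOTES.MD", "report.docx"]
    for name in names:
        status = "processed" if would_be_processed(name) else "ignored"
        print(f"{name!r}: {status}")
```

Note that an upper-case extension such as .MD is ignored as well, because the comparison does not lower-case the name first.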

What Happens Next:

- The upload triggers your function automatically

- Function processes the text file

- Converts to speech using Google's API

- Saves audio file to output bucket

Check the Results

- Navigate to your output bucket (e.g. my-voice-files)

- You should see a new .wav file

- Click the filename to access the public URL

- Click the URL to play the audio

The audio filename is based on your input filename, with the date appended and a .wav extension (for example, massive-research_dd-mm-yyyy.wav).
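If you would rather predict the link than click through the console, the output name and public URL can be worked out from the input filename. This is a sketch under assumptions: the helper names are hypothetical, the date is passed in explicitly so the result is reproducible, and the URL uses the standard storage.googleapis.com form for publicly readable objects.

```python
import os
import re
from datetime import date
from urllib.parse import quote


def output_filename(input_filename, on_date):
    """Follow the function's naming scheme: {name}_{dd-mm-yyyy}.wav."""
    base_name = os.path.splitext(input_filename)[0]
    safe_name = re.sub(r"[^a-zA-Z0-9\s\-_]", "", base_name)  # drop unsafe characters
    safe_name = re.sub(r"\s+", "_", safe_name.strip())       # spaces -> underscores
    return f"{safe_name}_{on_date.strftime('%d-%m-%Y')}.wav"


def public_url(bucket_name, object_name):
    """Public HTTPS URL for an object in a publicly readable bucket."""
    return f"https://storage.googleapis.com/{bucket_name}/{quote(object_name)}"


if __name__ == "__main__":
    # Illustrative date only; the function itself uses the date of processing
    name = output_filename("massive-research.md", date(2025, 1, 31))
    print(name)
    print(public_url("my-voice-files", name))
```

Because the date is part of the name, uploading the same file on different days produces separate audio files.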

Common Issues and Solutions

Function Won't Deploy

Issue: Deployment fails with permission errors. Solution: Ensure all required APIs are enabled (Eventarc, Cloud Functions, Text-to-Speech)

Audio File Doesn't Generate

Issue: Upload succeeds, but no audio file appears. Solution:

- Check function logs in the Cloud Functions console

- Verify bucket names match exactly in the function code

- Confirm the filename itself ends in .md or .txt

Cannot Access Audio File

Issue: Audio file generates, but returns an access denied error. Solution: Verify output bucket has allUsers with Storage Object Viewer role.

Poor Audio Quality

Issue: Voice sounds wrong or pronunciation issues. Solution:

- Review text cleaning—ensure markdown formatting is properly stripped

- Check for special characters that need escape handling

- Consider adjusting voice parameters in function code

Practical Usage Patterns

Research Document Workflow

- Complete research in Claude/Perplexity

- Export as markdown

- Upload to input bucket

- Receive audio file

- Listen whilst walking/commuting

Regular Cleanup

The two-bucket system makes maintenance simple:

- Periodically delete old text files from input bucket

- Delete processed audio files from output bucket when no longer needed

- Function continues working without any reconfiguration
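If you prefer to script the cleanup, the selection logic is small. The sketch below only decides which objects are old enough to delete; `select_stale` is a hypothetical helper and the 14-day threshold is an arbitrary example.

```python
from datetime import datetime, timedelta, timezone


def select_stale(objects, max_age_days, now=None):
    """Return names of objects last updated more than max_age_days ago.

    `objects` is an iterable of (name, updated) pairs, where `updated`
    is a timezone-aware datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [name for name, updated in objects if updated < cutoff]


if __name__ == "__main__":
    # Made-up listing for illustration
    listing = [
        ("old-notes_01-01-2025.wav", datetime(2025, 1, 1, tzinfo=timezone.utc)),
        ("new-notes_30-01-2025.wav", datetime(2025, 1, 30, tzinfo=timezone.utc)),
    ]
    fixed_now = datetime(2025, 2, 1, tzinfo=timezone.utc)
    print(select_stale(listing, max_age_days=14, now=fixed_now))
```

With the google-cloud-storage client, the pairs could come from `storage.Client().list_blobs(bucket_name)` using each blob's `name` and `updated` attributes, and the selected blobs removed with `blob.delete()`. Treat that wiring as an assumption to verify against your own setup before deleting anything.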

Mobile Access

Because the output bucket is public:

- Bookmark the bucket URL

- Access directly from mobile browser

- Stream audio files without downloading

- No authentication required

Next Steps: Automation

Whilst manual upload works perfectly well, the real power comes from automation:

Automated Upload via Make.com

Scenario: You complete research in Claude and want an automatic conversion. Solution: Create a Make.com scenario that:

- Receives markdown from Claude

- Uploads directly to the input bucket

- Triggers function automatically

[Note: Detailed Make.com integration will be covered in a separate walkthrough]

Notification When Complete

Scenario: You want to know when the audio file is ready. Solution: Create a second Cloud Function that:

- Listens to the output bucket (like the first function listens to input)

- Triggers when an audio file is created

- Sends a notification email or message

Implementation Pattern:

- Create new function with trigger on output bucket

- Use SendGrid, Mailgun, or Cloud Functions to send email

- Include public URL to audio file in notification
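The message-building part of that pattern can be sketched with the standard library alone. `build_notification` is a hypothetical helper; it assumes the event payload carries `bucket` and `name` keys, as in the first function, and it leaves the actual sending to whichever email service you choose.

```python
from email.message import EmailMessage
from urllib.parse import quote


def build_notification(event_data, sender, recipient):
    """Build an email announcing a new audio file from a storage event payload."""
    bucket_name = event_data["bucket"]
    object_name = event_data["name"]
    url = f"https://storage.googleapis.com/{bucket_name}/{quote(object_name)}"

    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = f"Audio ready: {object_name}"
    message.set_content(f"Your audio file is ready to play:\n{url}\n")
    return message


if __name__ == "__main__":
    # Made-up event payload and addresses for illustration
    event = {"bucket": "my-voice-files", "name": "massive-research_31-01-2025.wav"}
    print(build_notification(event, "me@example.com", "me@example.com"))
```

Keeping the message construction separate from the sending step makes it easy to swap email providers later without touching the rest of the function.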

Claude Integration

Scenario: You want Claude to handle the entire workflow. Solution: Create a Claude action that:

- Accepts markdown from conversation

- Uploads to input bucket via Make.com webhook

- Returns confirmation and estimated completion time

This removes all manual steps—you simply tell Claude to convert research to audio, and the entire process happens automatically.

The HITL Principle in Action

This walkthrough demonstrates a core HITL methodology principle: building capabilities that match your actual needs rather than paying for bundled features you don't use.

Commercial text-to-speech services charge £10-30/month because they're built for broad market appeal. Their costs cover:

- Universal UI/UX development

- Customer support infrastructure

- Marketing and sales operations

- Feature bloat for diverse use cases

For someone who needs a specific capability—converting research documents to audio—you can build exactly what you need for pennies rather than pounds.

The Broader Lesson: As AI tools make technical implementation more accessible, the question shifts from "can I build this?" to "should I buy this or build this?" For many focused use cases, building custom microservices makes more economic and operational sense than subscribing to general-purpose platforms.

What This Cost Structure Means

At £1.40/month for the entire project (not just this function), and 0.000054p per word for actual conversions, this service costs less than a cup of coffee. More importantly, costs scale with usage—if you don't use it for a few weeks, you pay almost nothing. Subscription services charge regardless of usage patterns.

Calculation for Context:

- Average commercial TTS service: £15/month

- This self-hosted approach: £1.40/month (entire project, heavy usage)

- Processing a 2,312-word document: 0.01p

- Annual savings: ~£163

- Break-even for setup time: Approximately 2 hours at a reasonable hourly rate
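The savings figure follows directly from the two monthly prices above. As a worked check (the hourly rate is not stated in the text, so the last value is simply what the 2-hour break-even implies over one year):

```python
COMMERCIAL_MONTHLY_GBP = 15.00   # average commercial TTS service, from the list above
SELF_HOSTED_MONTHLY_GBP = 1.40   # entire project under heavy usage, from the list above

monthly_saving = COMMERCIAL_MONTHLY_GBP - SELF_HOSTED_MONTHLY_GBP
annual_saving = monthly_saving * 12


def implied_hourly_rate(setup_hours):
    """Hourly rate at which the setup time is paid back within one year."""
    return annual_saving / setup_hours


if __name__ == "__main__":
    print(f"Monthly saving: £{monthly_saving:.2f}")
    print(f"Annual saving: £{annual_saving:.2f}")
    print(f"Implied rate for a 2-hour setup: £{implied_hourly_rate(2):.2f}/hour")
```

Change the two constants to your own subscription price and cloud bill to see where your break-even sits.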

For independent operators building multiple similar microservices, the cumulative savings become significant whilst maintaining full control over functionality and data.

Conclusion

You now have a working text-to-voice service that:

- Costs virtually nothing to run

- Processes long documents in a single pass

- Generates audio files accessible from anywhere

- Requires no ongoing maintenance

- Can be automated with other HITL tools

This represents a small but practical example of the HITL approach: identifying specific workflow needs, building targeted solutions using accessible cloud services, and avoiding subscription costs that don't align with actual usage patterns.

The video walkthrough shows the setup process in detail. This article provides the context for why this approach makes sense and how it fits into broader HITL methodology. Together, they should provide you with both the practical steps and the strategic understanding needed to implement similar microservices for other workflow needs.

Technical Requirements:

- Google Cloud account

- Basic understanding of cloud storage concepts

- Markdown or text files for conversion

Estimated Setup Time: 30-45 minutes for first-time setup, including API enablement and testing; about 12 minutes if you are quick and Google is kind. No pressure.


Automating Markdown File Uploads to the Storage Bucket (Directly From Claude In this Example)

Once your text-to-voice function is operational, you can automate the process of sending markdown files from Claude directly to the storage bucket. It makes things more seamless if you're using Claude for extensive research.

Setting Up the Make.com Scenario

Step 1: Add the Google Cloud Storage Module

Search for "storage" in Make.com and select the Google Cloud Storage module. Choose "Upload an Object" (Make's terminology for uploading a file). Create a connection using your Google Cloud project credentials—Make provides detailed connection instructions in their documentation.

Step 2: Configure the Upload Module

- Project ID: Enter your Google Cloud project ID

- Bucket: Specify your target storage bucket (tip: uncheck the map toggle to see all available buckets)

- File Name: Leave as "for now" initially

- Data: Leave as "for now" initially

- Content Type: Leave blank

- Upload Type: Simple upload

Step 3: Set Up Input Variables

For Claude to interact with this scenario, add input variables that provide context:

- file_name: Description: "Name of this markdown or text file"

- file_data: Description: "Contents of this markdown/text file"

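These descriptions help Claude understand what information to provide. Once the variables exist, map file_name to the File Name field and file_data to the Data field of the upload module, replacing the placeholders from Step 2.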
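The two inputs amount to a small key-value payload. The sketch below shows its shape and guards against the one thing that would make the cloud function ignore the upload, a missing .md or .txt extension. `build_scenario_inputs` is a hypothetical helper for illustration; Make.com itself handles the real transport.

```python
import json


def build_scenario_inputs(title, markdown_text):
    """Return the two scenario inputs, making sure the name has a usable extension."""
    if title.endswith((".md", ".txt")):
        file_name = title
    else:
        file_name = f"{title}.md"  # default to markdown when no extension is given
    return {"file_name": file_name, "file_data": markdown_text}


if __name__ == "__main__":
    inputs = build_scenario_inputs("massive-research", "# Findings\n\nSome research text.")
    print(json.dumps(inputs, indent=2))
```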

Step 4: Add Return Outputs

Configure a return output to send feedback to Claude:

- Add "Scenario Return Output" module

- Set return message to: "Success, you'll get an email"

Step 5: Configure Error Handling

Right-click the upload module and add an error handler:

- Add another "Scenario Return Output" module

- Map the error message to the return output so Claude receives meaningful error information

Step 6: Edit Scenario Description

Give your scenario a clear description explaining its purpose. For example: "Convert to voice: send markdown and file name. This action will send an email with the file and return the file." LLMs such as Claude rely heavily on these descriptions to understand tool functionality.

Connecting to Claude via MCP

Step 1: Get Your MCP Connector

In Make.com:

- Navigate to Dashboard → Profile → API Access

- Copy the MCP connector code

Step 2: Add to Claude

Paste the MCP connector into Claude's settings. Your Make.com scenarios will now appear as available tools within Claude.

A short video of how to connect Claude to Make.com via MCP showing the steps above.

Using the Automation

Once configured, you can instruct Claude directly:

"Push this to the voice action command"

Claude will automatically:

- Send the markdown content to your Make.com scenario

- Upload the file to your storage bucket

- Trigger the text-to-voice cloud function

- Send you an email notification when complete

Alternative Upload Methods

The storage bucket trigger approach offers flexibility. You can also:

- Google Drive Integration: Create a Make.com scenario that watches a specific Google Drive folder, automatically uploading any files dropped there to the storage bucket

- Manual Upload: Continue using the Google Cloud Console for one-off uploads

- Other AI Platforms: Connect Perplexity, OpenAI, or other services using similar Make.com scenarios

The fundamental advantage of this design is that the entire text-to-voice workflow runs automatically as soon as a file reaches the storage bucket, regardless of how it arrived. You can therefore automate uploads from many different sources to support your HITL personal productivity.